- Software infrastructure for human-centric pervasive computing:

- We are creating components of software infrastructure capable of supporting interactions among people, spaces and mobile devices on scales ranging from single rooms to intercontinental multi-person collaborations.

- Collaboration support:

- Collaboration support is the main target application area where we apply and evaluate our technology. We recognize that collaboration happens in scheduled formal meetings as well as spontaneously during chance encounters. In addition, some group work happens offline, when people contribute comments, opinions and information asynchronously.

- Novel Human-Computer Interfaces:

- We are developing and evaluating new interfaces for people to interact with their environments. For more information, e-mail AIRE-Contact.



As our technology expands to cover more places and people, it becomes increasingly difficult to write applications that make effective use of large numbers of diverse resources. For our purposes, resource management involves both selecting appropriate resources for a given application and allocating these scarce resources among applications. Existing resource managers fall short of meeting these needs on a large scale, so we are working on a new system that operates more effectively, especially with multiple users and locations. This system takes a more decision-theoretic approach to arbitrating resource requests.

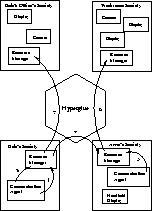

We are building a new level of discovery and communication services that will allow Metaglue-enabled spaces to interact with one another. This new infrastructure layer, called Hyperglue, will also allow software running on behalf of people, groups of people, institutions and information sources to become part of the global communication network.

The design of Hyperglue is based on two main concepts: delegation and high-level service discovery. Imagine a scenario where Bob wishes to communicate with Anne without knowing her location. Bob's personal communication agent first contacts Anne's communication agent through Hyperglue. The two agents negotiate and decide whether the communication request should be granted and which modes of communication are permitted. Then both Bob's and Anne's personal agents negotiate with their surrounding environments for the use of the necessary resources.
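The two negotiation stages in the Bob-and-Anne scenario can be sketched as set intersections, assuming each agent's policy is simply a set of acceptable communication modes and accepted callers. `PersonalAgent`, `Environment` and `negotiate` are hypothetical names, not Hyperglue's API, and the discovery step (locating Anne's agent) is omitted.

```python
from dataclasses import dataclass, field


@dataclass
class PersonalAgent:
    owner: str
    # Hypothetical policy model: modes this person will use, callers they accept.
    allowed_modes: set[str]
    accepted_callers: set[str] = field(default_factory=set)

    def handle_request(self, caller: str, proposed: set[str]) -> set[str]:
        """Callee side: grant only modes both sides allow, and only to accepted callers."""
        if caller not in self.accepted_callers:
            return set()
        return proposed & self.allowed_modes


@dataclass
class Environment:
    name: str
    available_modes: set[str]  # modes the surrounding devices can currently support


def negotiate(caller: PersonalAgent, callee: PersonalAgent,
              caller_env: Environment, callee_env: Environment) -> set[str]:
    # Stage 1: agent-to-agent negotiation decides whether and how to communicate.
    permitted = callee.handle_request(caller.owner, caller.allowed_modes)
    # Stage 2: each side keeps only modes its surroundings can actually provide.
    return permitted & caller_env.available_modes & callee_env.available_modes
```

With Bob allowing voice, video and text, Anne allowing voice and text for Bob, and Anne currently in a hallway that offers only a text display, the negotiated result is `{"text"}`.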

The devices that occupy the Intelligent Room define the boundaries of its functionality. While computers obviously play a major role, less complex devices extend user interaction beyond the reach of traditional computing environments. The Intelligent Room incorporates many computer-controlled devices, including lights, audio equipment, projectors, sensors, and servos. My work involves standardizing the way state information for these devices is handled. This convention will allow developers to easily add support for novel devices.

Contact Tyler Horton with questions about this project.
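One way such a convention could look is sketched below, under the assumption that every device reports its state as named properties and notifies subscribers of changes. `Device`, `Light`, `set`, `subscribe` and `_apply` are hypothetical names, not Metaglue's actual API.

```python
from abc import ABC, abstractmethod
from typing import Any, Callable


class Device(ABC):
    """Hypothetical uniform interface: state is a dictionary of named
    properties, and listeners are notified whenever a property changes."""

    def __init__(self) -> None:
        self._state: dict[str, Any] = {}
        self._listeners: list[Callable[[str, Any], None]] = []

    def get_state(self) -> dict[str, Any]:
        return dict(self._state)  # a copy, so callers cannot mutate internal state

    def set(self, prop: str, value: Any) -> None:
        self._apply(prop, value)  # drive the hardware first; may raise
        self._state[prop] = value  # record state only if the device accepted it
        for listener in self._listeners:
            listener(prop, value)

    def subscribe(self, listener: Callable[[str, Any], None]) -> None:
        self._listeners.append(listener)

    @abstractmethod
    def _apply(self, prop: str, value: Any) -> None:
        """Device-specific control; each new device type implements this."""


class Light(Device):
    def _apply(self, prop: str, value: Any) -> None:
        if prop == "brightness" and not 0 <= value <= 100:
            raise ValueError("brightness must be 0-100")
        # A real driver would send a command to the lighting controller here.
```

Supporting a novel device then only requires a subclass that implements `_apply`; state queries and change notification come from the base class, and a rejected command leaves the recorded state untouched.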

In collaboration with the CORE group in MIT's Laboratory for Computer Science, we are developing a Metaglue-CORE gateway. The goal of this gateway is to give Metaglue a way to explicitly define connections between devices in e21. CORE is a new device interconnect system that delegates connection control to third-party agents. The strength of this system is its ability to take unspecific inputs and a list of rules and route messages to their destinations. In particular, CORE lets e21 handle media streams easily. The Metaglue-CORE gateway will allow e21 to use CORE to manipulate both audio and video streams, making it possible to create and display multimodal media presentations.
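The idea of routing messages that carry no explicit destination through a list of rules can be illustrated with a small sketch. This is not CORE's interface; `Rule`, `route` and the message fields are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical message format: a flat dictionary of attributes, no destination field.
Message = dict[str, str]


@dataclass
class Rule:
    matches: Callable[[Message], bool]  # predicate over message attributes
    destination: str


def route(message: Message, rules: list[Rule]) -> list[str]:
    """Return every destination whose rule matches, in rule order.
    The sender never names a destination; the rules decide."""
    return [rule.destination for rule in rules if rule.matches(message)]
```

With one rule sending video to `projector-1` and another sending audio or video to `meeting-recorder`, a frame tagged `{"type": "video", "source": "camera-2"}` reaches both, without the camera knowing either destination exists.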

We are currently working on deploying simple sensors throughout our instrumented spaces. Complementing speech, vision and graphical interfaces, these sensors will provide simple yet robust information about motion in a space, noise and light levels, and pressure on surfaces such as the floor and furniture. They will help us better judge the state of the interactions in a space, as well as provide feedback about the state of some of the space's physical resources, such as lights, speakers and drape controllers.

There are two components to this project. First, we need to deploy the sensors so that they are accessible from any of the computers controlling the space. Second, information from numerous sensors needs to be combined to provide a high-level picture of the state of the interactions in a space.
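The second component, combining sensor information, could start as simple rules over the raw readings. The sketch below assumes four hypothetical readings and illustrative thresholds that are guesses, not calibrated values; a deployed system would more likely use a calibrated or probabilistic model.

```python
from dataclasses import dataclass


@dataclass
class Readings:
    motion_events_per_min: float  # from motion sensors
    noise_db: float               # from a microphone level meter
    occupied_seats: int           # from pressure sensors in the furniture
    lights_on: bool               # from light-level sensors


def infer_activity(r: Readings) -> str:
    """Fuse low-level readings into a coarse activity label (illustrative thresholds)."""
    if r.occupied_seats == 0 and r.motion_events_per_min < 1:
        return "empty"
    if r.occupied_seats >= 2 and not r.lights_on:
        return "presentation"  # seated audience with the lights dimmed
    if r.occupied_seats >= 2 and r.noise_db > 50:
        return "meeting"       # several seated people talking
    return "occupied"
```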

SAM is an HCI micro-project aimed at developing an expressive and responsive user interface agent for e21. Currently, SAM consists of a minimal representation of an animated face that conveys emotional states such as confusion, surprise, worry, and anger.

SAM is one of the output modalities of a larger project to make e21 affective and emotion-enabled. Inspired by the humanness of HAL in 2001: A Space Odyssey, this project aims to make the Intelligent Room both responsive to user affect (curiosity, frustration, etc.) and expressive of its own state in emotional terms. These emotions would be expressed by SAM, as well as through spoken inflection in text-to-speech (TTS) output.

In collaboration with the Vision Interface Project (VIP), we have developed a new interface for bringing the environment's speech recognition capabilities into our activities, such as meetings. The Look-To-Talk (LTT) interface (see paper) combines an animated avatar representing the room with head-pose tracking. When the user faces the avatar, the environment gets ready to engage in a spoken interaction. If, instead, the user faces other people in the space, the environment's speech recognition system remains inactive, preventing accidental responses to utterances that are directed at other people rather than at the environment. Further work is planned on a rigorous evaluation of LTT and on improvements to the system.
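The core gating logic of an interface like LTT can be sketched as follows, assuming the head-pose tracker reports the user's head yaw relative to the avatar's direction. The class name, the angle thresholds and the use of hysteresis are assumptions for illustration, not details taken from the LTT paper.

```python
class LookToTalkGate:
    """Enable speech recognition only while the user faces the avatar.
    Input is head yaw in degrees relative to the avatar. Two hypothetical
    thresholds add hysteresis, so small head jitter near the boundary does
    not toggle the recognizer on and off."""

    def __init__(self, engage_deg: float = 15.0, release_deg: float = 25.0) -> None:
        if engage_deg >= release_deg:
            raise ValueError("engage_deg must be smaller than release_deg")
        self.engage_deg = engage_deg
        self.release_deg = release_deg
        self.listening = False

    def update(self, yaw_to_avatar_deg: float) -> bool:
        offset = abs(yaw_to_avatar_deg)
        if not self.listening and offset <= self.engage_deg:
            self.listening = True   # user turned toward the avatar
        elif self.listening and offset > self.release_deg:
            self.listening = False  # user clearly turned toward someone else
        return self.listening
```

Feeding the yaw sequence 40, 10, 20, 30, 20 degrees yields the listening states False, True, True, False, False: the recognizer engages when the user looks at the avatar and stays engaged through small movements until the user clearly turns away.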

O2Flow is a Metaglue package for capturing, multicasting, and rendering real-time audio and video media streams. O2Flow maintains its own MBONE-compatible multicast sessions and offers multicast directory lookup services to all Metaglue agents. O2Flow relies on Sun's Java Media Framework (JMF) and requires a platform with full JMF compatibility (currently Win32 or Linux).

Next steps include creating distributed media storage repositories for media archiving, indexing, and retrieval, and integrating O2Flow with the eFacilitator for meeting capture.

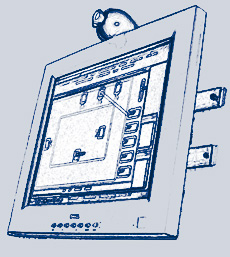

ki/o extends intelligent environments like e21 into transitional spaces and informal gathering areas such as hallways, break areas, and elevator lobbies.

To accomplish this, ki/o embeds devices augmented with Metaglue agents in these spaces, along with touch-sensitive, perceptually enabled kiosk interfaces.

These kiosks grant direct access to users' agent societies through context-sensitive multimodal UIs, and provide situated services to the locations where they are installed, such as capturing nearby unplanned informal meetings.

Please see the ki/o working group site for more information.

The goal of this project is to integrate speech into the existing sketching system, creating a multimodal environment in which mutual disambiguation of the input modes helps identify the user's intentions. In the future, the Intelligent Room infrastructure could add other modalities to assist in recognizing the user's intentions. While sketching and talking, users also gesture at parts of the drawing to identify certain parts of the sketch or to indicate actions. The Intelligent Room could provide this gesture data, which would aid both in identifying the user's intentions and in disambiguation.

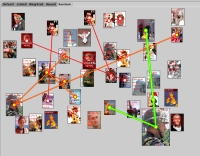

The goal of this work, done in collaboration with the EWall Project, is to provide algorithms for arranging existing knowledge that inspire alternative ways of understanding by drawing visual and conceptual connections between separate Information Objects. These arrangements are informed by observing how users select and organize presented information, make further related inquiries, and develop their ideas. Surveys and evaluations of existing information arrangement algorithms and visualization tools have been carried out, along with suggestions for new additions and modifications. A design and a prototype consisting of a few primitive arrangements have been built for testing and comparison. Future work will focus on developing and testing more complex algorithms. The algorithms will be used to present a database, as well as to visualize and analyze the results of user tests from other projects.
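As an example of what a primitive arrangement might look like, the sketch below places Information Objects on a circle so that objects sharing keywords sit next to each other. Keyword sets, Jaccard similarity and the greedy nearest-neighbour ordering are assumptions chosen for illustration, not the algorithms surveyed or prototyped in this work.

```python
import math


def jaccard(a: set[str], b: set[str]) -> float:
    """Similarity of two keyword sets: shared keywords over all keywords."""
    return len(a & b) / len(a | b) if a | b else 0.0


def circular_arrangement(objects: dict[str, set[str]],
                         radius: float = 1.0) -> dict[str, tuple[float, float]]:
    """Place objects evenly on a circle, ordered greedily so each object is
    followed by the remaining object most similar to it."""
    remaining = sorted(objects)  # sorted for deterministic output
    if not remaining:
        return {}
    order = [remaining.pop(0)]
    while remaining:
        last = objects[order[-1]]
        # Ties go to the first candidate in sorted order.
        nxt = max(remaining, key=lambda name: jaccard(last, objects[name]))
        remaining.remove(nxt)
        order.append(nxt)
    step = 2 * math.pi / len(order)
    return {name: (radius * math.cos(i * step), radius * math.sin(i * step))
            for i, name in enumerate(order)}
```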

Planlet is a generic software layer for representing plans of users' tasks. Planlet makes it easier to build task-aware ubiquitous computing (ubicomp) applications by providing generic services to hold and manipulate knowledge about user plans, habits and needs. Ubicomp applications can use the knowledge embedded in Planlet to reduce task overhead and user distractions. For instance, applications can proactively remind the user to perform planned task steps, automatically configure the user's working environment, and guide the user in following best-known practices.

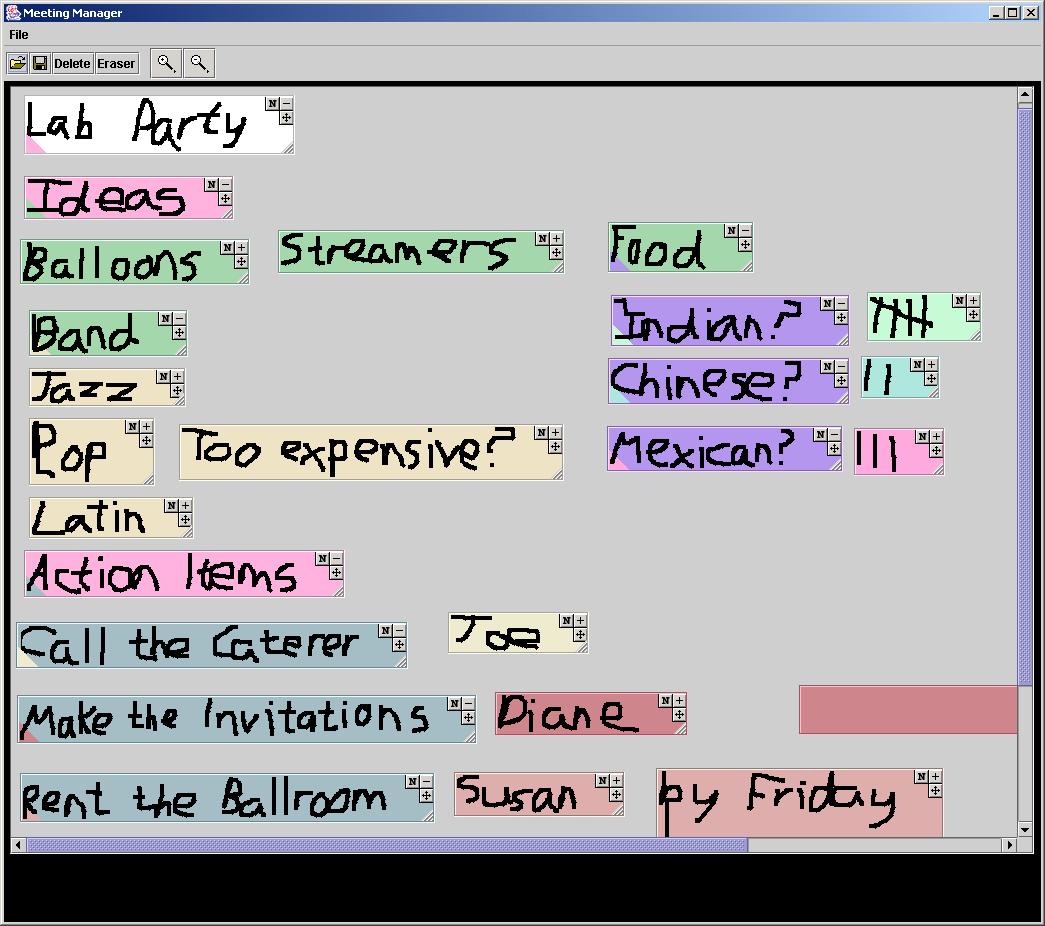

The declining cost of cameras and whiteboard capture tools has made the "electronic meeting" feasible. We are developing the eFacilitator, a tool that allows a recorder or facilitator to take notes on an electronic whiteboard. Besides offering the full flexibility of a regular whiteboard, the eFacilitator lets the recorder move notes around, shrink or expand them, minimize them, organize them into a hierarchy, and store them where they can be accessed offline. Furthermore, each meeting note will be time-stamped and indexed into the meeting's video record. Thus, a user can quickly browse through a meeting record, find the items he or she wants, and view the full interaction exactly as it took place. The eFacilitator can be downloaded here.
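Indexing a note into the video record amounts to converting its wall-clock timestamp into a seek offset. The sketch assumes each note carries a creation time and that the recording's start time is known; `Note`, `video_offset_seconds` and the dates used below are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Note:
    text: str
    created: datetime  # wall-clock time the note was written on the whiteboard


def video_offset_seconds(note: Note, recording_start: datetime) -> float:
    """Seek position (seconds into the meeting video) for a note."""
    offset = (note.created - recording_start).total_seconds()
    if offset < 0:
        raise ValueError("note was written before the recording started")
    return offset
```

A note written at 14:12:30 in a recording started at 14:00:00 maps to an offset of 750 seconds.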

In collaboration with the Design Rationale Group (DRG), we are working on a system for capturing and indexing software design meetings. The system combines our work on meeting facilitation and capture with DRG's work on Tahuti, a system for understanding hand-drawn UML sketches.

During software design meetings, designers develop object-oriented software, including new agent-based technologies for the Intelligent Room, by sketching UML-type designs on a whiteboard. To capture the design meeting history, our meeting capture system uses the available audio, video, and screen capture services to record the entire meeting. However, without a proper indexing scheme, finding a particular moment in the video and audio records of the design history can be cumbersome. To detect, index, and timestamp significant events in the design process, Tahuti records, recognizes, and understands the UML-type sketches drawn during the meeting. These timestamps can be mapped to particular moments in the captured video and audio, aiding retrieval of the captured information.

A tool capable of quickly and effectively visualizing the content of a meeting has long been sought and widely researched. Such a tool should unobtrusively record the progress of a meeting and then show its content in a manner that preserves the format of the meeting while providing tools that facilitate analysis.

MeetingView seeks to embody these virtues by recording meetings or conversations in an intelligent space and presenting them in a way that emphasizes the time- and source-sensitive aspects of the information being conveyed. Bridging the EWall and Intelligent Room groups, MeetingView aims to use the rich recording resources that intelligent spaces provide to unobtrusively gather information about a discussion. The future goal is for the room to recognize the speaker and transcribe what he or she is saying. This information would then be fed into an EWall View interface (similar to the NewsView tool) for display. Part of the MeetingView work will focus on developing a more robust EWall View tool (agent) that can be used for a wider range of applications.