Kismet is an autonomous robot designed for social interactions with

humans and is part of the larger

An infant's emotions and drives play an important role in generating

meaningful interactions with the caretaker. These interactions

constitute learning episodes for new communication behaviors. In

particular, the infant is strongly biased to learn communication

skills that result in having the caretaker satisfy the infant's

drives. The infant's emotional responses provide important cues which

the caretaker uses to assess how to satiate the infant's drives, and

how to carefully regulate the complexity of the interaction. The

former is critical for the infant to learn how its actions influence

the caretaker, and the latter is critical for establishing and

maintaining a suitable learning environment for the infant.

An infant's motivations are vital to regulating social interactions

with his mother. Soon after birth, an infant is able to display a

wide variety of facial expressions, and responds to events in the

world with expressive cues that his mother can read, interpret, and

act upon. She interprets them as indicators of his internal state

(how he feels and why), and modifies her actions to promote his

well-being. For example, when he appears content she tends to maintain the

current level of interaction, but when he appears disinterested she

intensifies or changes the interaction to try to re-engage him. In

this manner, the infant can regulate the intensity of interaction with

his mother by displaying appropriate emotive cues. The mother

instinctively reads her infant's expressive signals and modifies her

actions in an effort to maintain a level of interaction suitable for

him.

For Kismet, an important function of its motivational system is

not only to establish appropriate interactions with the caretaker, but

also to regulate their intensity so that Kismet is neither

over-whelmed nor under-stimulated by them. When designed properly,

the intensity of Kismet's expressions provides appropriate cues for the

caretaker to increase the intensity of the interaction, tone it down,

or maintain it at the current level. By doing so, both parties can

modify their own behavior and the behavior of the other to maintain

the intensity of interaction that Kismet requires to behave

adeptly.

The use of emotional expressions and gestures facilitates and biases

learning during social exchanges. Parents take an active role in

shaping and guiding how and what infants learn by means of

scaffolding. As the word implies, the parent provides a supportive

framework for the infant by manipulating the infant's interactions

with the environment to foster novel abilities. Commonly, scaffolding

involves reducing distractions, marking the task's critical

attributes, reducing the number of degrees of freedom in the target

task, providing ongoing reinforcement through expressive displays of

face and voice, and enabling the subject to experience the end or

outcome of a sequence of activity before the infant is cognitively or

physically capable of seeking and attaining it for himself.

The emotive cues the parent receives during

social exchanges serve as feedback so the parent can adjust the nature

and intensity of the structured learning episode to maintain a

suitable learning environment where the infant is neither bored nor

over-whelmed.

In addition, during early interactions with his mother, an infant's

motivations and emotional displays are critical in establishing the

foundational context for learning episodes from which he can learn

shared meanings of communicative acts. During early face-to-face

exchanges with his mother, an infant displays a wide assortment of

emotive cues such as coos, smiles, waves, and kicks. At such an early

age, the infant's basic needs, emotions, and emotive expressions are

among the few things his mother thinks they share in common.

Consequently, she imparts a consistent meaning to her infant's

expressive gestures and expressions, interpreting them as meaningful

responses to her mothering and as indications of his internal state.

Curiously, some experiments performed by developmental psychologists

suggest that the mother actually supplies most, if not all, of the

meaning to the exchange when the infant is so young. The infant does

not know the significance his expressive acts have for his mother, nor

how to use them to evoke specific responses from her. However,

because the mother assumes her infant shares the same meanings

for emotive acts, her consistency allows the infant to discover

what sorts of activities on his part will get specific responses from

her. Routine sequences of a predictable nature can be built up which

serve as the basis of learning episodes.

Furthermore, these routines provide a context of mutual expectations.

For example, early cries of an infant elicit various care-giving

responses from his mother depending upon how she initially interprets

these cries and how the infant responds to her mothering acts. Over

time, the infant and mother converge on specific meanings for

different kinds of cries. Gradually the infant uses subtly different

cries (e.g., cries of distress, cries for attention, cries of pain,

cries of fear) to elicit different responses from his mother. The

mother reinforces the shared meaning of the cries by responding in

consistent ways to the subtle variations. Evidence of this phenomenon

exists where mother-infant pairs develop communication protocols

different from those of other mother-infant pairs.

An ongoing research goal is to implement these ideas so that Kismet

is biased to learn how its actions influence the caretaker in

order to satisfy its own drives. Toward this end, Kismet is endowed

with a motivational system that works to maintain its drives within

homeostatic bounds and motivates the robot to learn behaviors that

satiate them. Further, Kismet can display a set of emotive

expressions that are easily interpreted by a naive observer as

analogues of the types of emotive expressions that human infants

display. This allows the caretaker to observe Kismet's emotive

expressions and interpret them as communicative acts. She assumes the

robot is trying to tell her which of its needs must be tended to, and

she acts accordingly. This establishes the requisite routine

interactions for the robot to learn how its emotive acts influence the

behavior of the caretaker, which ultimately serves to satiate the

robot's own drives.

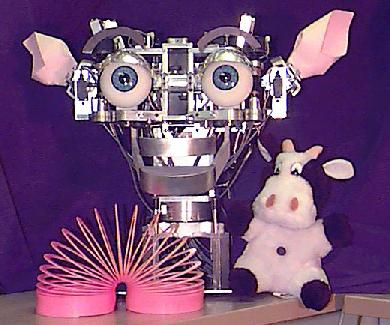

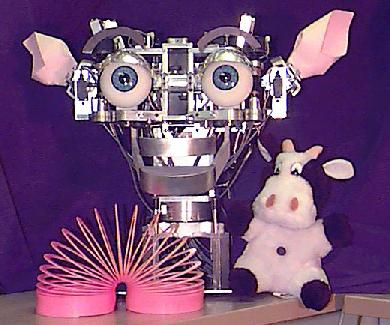

To explore these ideas, Kismet was adapted from an existing active

vision platform commonly used in the Cog Shop. The figure above shows

various stages of development of Kismet. The original head is shown

above at the far left (circa May 1997), and the current state of the

robot is shown at the far right (circa March 1998). The appearance and

degrees of freedom of the robot continue to evolve.

As in other active vision systems, there are three degrees of

freedom: each eye has an independent vertical axis of rotation (pan)

and the eyes share a joint horizontal axis of rotation (tilt). Each

eyeball has a color CCD camera embedded within it having a 5.6 mm

focal length. Although this limits the field of view, most social

interactions require a high acuity central area to capture the details

of face-to-face interaction. However, infants have poor visual acuity

which restricts their visual attention to about two feet away --

typically the distance to their mother's face when the infant is being

held (for example, at one month the infant has a visual acuity between

20/400 and 20/600). This choice of camera is a balance between the

need for high resolution and the need for a wide low-acuity field of

view.

Over time, the basic active vision platform has been embellished

with facial features so that Kismet is capable of a wide range of

emotive facial expressions (as shown in the figure above). Currently,

these facial features include eyebrows (each with two

degrees-of-freedom: lift and arch), ears (each with two

degrees-of-freedom: lift and rotate), eyelids (each with one degree of

freedom: open/close), and a mouth (with one degree of freedom:

open/close). The robot is able to show expressions analogous to

anger, fatigue, fear, disgust, excitement, happiness, interest,

sadness, and surprise (shown in the figure below) which are easily

interpreted by an untrained human observer.
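
The per-feature counts above can be tallied in a few lines. This is only an illustrative sketch: it assumes the robot has two eyebrows, two ears, and two eyelids (the text gives the degrees of freedom per feature, not the number of each feature), and the names `FACIAL_FEATURES` and `total_dof` are hypothetical.

```python
# Degrees of freedom per facial feature, as listed in the text.
# ASSUMPTION: eyebrows, ears, and eyelids come in pairs; there is one mouth.
FACIAL_FEATURES = {
    "eyebrow": {"count": 2, "dof": ("lift", "arch")},
    "ear": {"count": 2, "dof": ("lift", "rotate")},
    "eyelid": {"count": 2, "dof": ("open/close",)},
    "mouth": {"count": 1, "dof": ("open/close",)},
}


def total_dof(features):
    """Sum (number of features) * (axes per feature) over all features."""
    return sum(f["count"] * len(f["dof"]) for f in features.values())


print(total_dof(FACIAL_FEATURES))
```

Under that pairing assumption the facial features contribute 11 degrees of freedom, in addition to the three degrees of freedom of the eyes.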

Planned extensions to Kismet's sensor and motor systems include

stereo microphones for auditory input as well as a synthesized

articulatory model for vocalized outputs.

Kismet's active vision platform is attached to a parallel network of

digital signal processors (Texas Instruments TMS320C40), as shown in

the figure above. The DSP network serves as the sensory

processing engine and implements the bulk of the robot's perception

and attention systems. Each node in the network contains one

processor with the option for more specialized hardware for capturing

images, performing convolution quickly, or displaying images to a VGA

display. Nodes may be connected with arbitrary bi-directional

hardware connections, and distant nodes may communicate through

virtual connections. Each camera is attached to its own frame

grabber, which can transmit captured images to connected nodes.

A pair of Motorola 68332-based microcontrollers are also connected

to Kismet. One controller implements the motor system for driving

the robot's facial motors. The second controller implements the

motivational system (emotions and drives) and the behavior

system. This node receives pre-processed perceptual information from

the DSP network through a dual-ported RAM, and converts this

information into a behavior-specific percept which is then fed into

the rest of the behavior engine.

A framework for Kismet's behavior engine is shown to the right. The

organization and operation of this framework are heavily influenced by

concepts from psychology, ethology, and developmental psychology, as

well as the applications of these fields to robotics as outlined in

"Alternative Essences of

Intelligence". The system architecture consists of five

subsystems: the perception system, the motivation system,

the attention system, the behavior system, and the

motor system, an elaboration of the version presented in previous

work, "A Motivational

System for Regulating Human-Robot Interaction". The perception system

extracts salient features from the world, the motivation system

maintains internal state in the form of ``drives'' and ``emotions'',

the attention system determines saliency based upon perception and

motivation, the behavior system implements various types of behaviors

as conceptualized by the theories of Tinbergen and Lorenz, and the

motor system realizes these behaviors as facial expressions and other

motor skills.

A series of experiments were performed with Kismet using a specific

implementation of the behavior engine framework.

The total system consists of three drives: fatigue,

social, and stimulation; three consummatory behaviors:

sleep, socialize, and play; two visually-based

percepts: ``face'' and ``non-face''; five emotions: anger,

disgust, fear, happiness, sadness; two expressive

states: tiredness and interest, and their corresponding facial

expressions.
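
For readers who find it easier to see the inventory as data, the components listed above can be written as a small configuration object. This is a sketch only, not the original implementation: the class name `BehaviorEngineConfig` and the mapping `SATIATED_BY` are hypothetical, and pairing each drive with the consummatory behavior in the same list position is an assumption drawn from the order in which the text lists them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BehaviorEngineConfig:
    """Inventory of the components named in the text (names are illustrative)."""
    drives: tuple
    consummatory_behaviors: tuple
    percepts: tuple
    emotions: tuple
    expressive_states: tuple


CONFIG = BehaviorEngineConfig(
    drives=("fatigue", "social", "stimulation"),
    consummatory_behaviors=("sleep", "socialize", "play"),
    percepts=("face", "non-face"),
    emotions=("anger", "disgust", "fear", "happiness", "sadness"),
    expressive_states=("tiredness", "interest"),
)

# ASSUMPTION: drives and behaviors pair up in the order they are listed.
SATIATED_BY = dict(zip(CONFIG.drives, CONFIG.consummatory_behaviors))

for drive, behavior in SATIATED_BY.items():
    print(f"{drive} drive -> {behavior} behavior")
```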

A more detailed schematic for the ``social'' circuit is shown

below. The ``fatigue'' circuit and the ``stimulation'' circuit follow

a similar structure. See "Infant-like Social Interactions

Between a Robot and a Human Caretaker" for an in-depth

presentation.

Each experiment involved a human interacting with Kismet either

through direct face-to-face interaction, by waving a hand at the

robot, or using a toy to play with the robot. Two toys were used:

a small plush black and white cow and

an orange plastic slinky. The perceptual system

classifies these interactions into two classes: ``face stimuli'' and

``non-face stimuli''. The face detection routine classifies both

the human face and the face of the plush cow as face stimuli, while

the waving hand and the slinky are classified as non-face stimuli.

Additionally, the motion generated by the object gives a rating of the

stimulus intensity. The robot's facial expressions reflect its

ongoing motivational state (i.e., its mood) and provide the human

with visual cues as to how to modify the interaction to keep the

robot's drives within homeostatic ranges.
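
The classification just described can be summarized as a lookup from stimulus to percept class plus an intensity rating. The sketch below is hypothetical: the real system classifies camera images, whereas here the stimulus is given by name, the `motion` argument is an assumed scalar in [0, 1], and the linear scaling to the 0 to 2000 activation range used in the data plots is an assumption.

```python
from dataclasses import dataclass

# Stimuli used in the experiments and the class the text reports for each.
STIMULUS_CLASS = {
    "human face": "face",
    "plush cow": "face",
    "waving hand": "non-face",
    "slinky": "non-face",
}

MAX_ACTIVATION = 2000  # percept activations are plotted from 0 to 2000


@dataclass(frozen=True)
class Percept:
    kind: str       # "face" or "non-face"
    intensity: int  # 0 .. MAX_ACTIVATION


def make_percept(stimulus, motion):
    """Build a percept; `motion` is a hypothetical scalar in [0, 1]."""
    clamped = min(max(motion, 0.0), 1.0)
    # ASSUMPTION: intensity scales linearly with the amount of motion.
    return Percept(STIMULUS_CLASS[stimulus], round(clamped * MAX_ACTIVATION))


print(make_percept("plush cow", 0.5))
```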

In general, as long as all the robot's drives remain within their

homeostatic ranges, the robot displays ``interest''. This cues the

human that the interaction is of appropriate intensity. If the human

engages the robot in face-to-face contact while its drives are within

their homeostatic regime, the robot displays ``happiness''. However,

once any drive leaves its homeostatic range, the robot's ``interest''

and/or ``happiness'' wane(s) as it grows increasingly distressed. As

this occurs, the robot's expression reflects its distressed state.

This visual cue tells the human that all is not well with the robot,

whether the human should switch the type of stimulus, and whether the

intensity of interaction should be intensified, diminished or

maintained at its current level.
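
The display rule in this paragraph can be sketched as a small function. This is a deliberate simplification under stated assumptions: the function name `select_expression` is hypothetical, the output is a single discrete label, and "distress" is used as an umbrella term, whereas the robot's actual expressions vary continuously in intensity and depend on which drive has left its range.

```python
def select_expression(drives_in_range, face_to_face):
    """Pick a coarse expression label.

    drives_in_range: dict mapping drive name -> True if that drive is
                     within its homeostatic range.
    face_to_face:    True if a human is engaging the robot face-to-face.
    """
    if all(drives_in_range.values()):
        # All drives satisfied: happiness with face contact, else interest.
        return "happiness" if face_to_face else "interest"
    # Any drive outside its range: interest/happiness give way to distress.
    return "distress"


balanced = {"fatigue": True, "social": True, "stimulation": True}
print(select_expression(balanced, face_to_face=False))
print(select_expression(balanced, face_to_face=True))
print(select_expression({**balanced, "social": False}, face_to_face=True))
```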

For all of the experiments, data was recorded on-line in

real-time during interactions between a human and the robot. The data

plots below show the activation levels of the appropriate emotions,

drives, behaviors, and percepts. Emotions are always plotted together

with activation levels ranging from 0 to 2000. Percepts, behaviors,

and drives are often plotted together. Percepts and behaviors have

activation levels that also range from 0 to 2000, with higher values

indicating stronger stimuli or higher potentiation respectively.

Drives have activations ranging from -2000 (the over-whelmed extreme)

to 2000 (the under-whelmed extreme).
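
To make the drive scale concrete, the sketch below clamps a drive value to the stated range and labels its regime. The range of -2000 to 2000 and the meaning of the two extremes come from the text; the width of the homeostatic band (`HOMEOSTATIC_BOUND = 500`) is not given in the text and is purely an assumed value, as are the function names.

```python
DRIVE_MIN, DRIVE_MAX = -2000, 2000  # range stated in the text
HOMEOSTATIC_BOUND = 500             # ASSUMPTION: band width is not given


def clamp_drive(value):
    """Keep a drive activation inside [DRIVE_MIN, DRIVE_MAX]."""
    return max(DRIVE_MIN, min(DRIVE_MAX, value))


def drive_regime(value, bound=HOMEOSTATIC_BOUND):
    """Label a drive value as over-whelmed, homeostatic, or under-whelmed."""
    v = clamp_drive(value)
    if v < -bound:
        return "over-whelmed"   # negative extreme
    if v > bound:
        return "under-whelmed"  # positive extreme
    return "homeostatic"


for sample in (-1800, 0, 1500):
    print(sample, drive_regime(sample))
```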

This plot shows how Kismet's internal state responds to varying

intensities of face-to-face contact. Before the run begins, the robot

is not shown any faces so that the social drive lies in the

lonely regime and the robot displays an expression of

``sadness''. At t=10 the experimenter makes face-to-face

contact with the robot. From 10 <= t <= 58 the face stimulus is

within the desired intensity range. This corresponds to small head

motions, much like those made when engaging a person in

conversation. As a result, the social drive moves to the

homeostatic regime, and a look of ``interest'' and ``happiness''

appears on the robot's face. From 60 <= t <= 90 the experimenter

begins to sway back and forth in front of the robot. This corresponds

to a face stimulus of over-whelming intensity, which forces the

social drive into the asocial regime. As the drive

intensifies toward a value of -1800, first a look of ``disgust''

appears on the robot's face, which grows in intensity and is

eventually blended with ``anger''. From 90 <= t <= 115 the

experimenter turns her face away so that it is not detected by the

robot. This allows the drive to recover back to the homeostatic

regime and a look of ``interest'' returns to the robot's face. From

115 <= t <= 135 the experimenter re-engages the robot in

face-to-face interaction of acceptable intensity, and

the robot responds with an expression of ``happiness''. From 135 <=

t <= 170 the experimenter turns away from the robot, which causes

the drive to return to the lonely regime and redisplay

``sadness''. For t >= 170 the experimenter re-engages the robot

in face-to-face contact, which leaves the robot in an ``interested''

and ``happy'' state at the conclusion of the run.
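
The run described above can be restated as a table of phases, which makes it easy to look up what the experimenter was doing and what the robot displayed at a given time. The times and expressions are taken from the description; the data layout and the helper `phase_at` are hypothetical. Where two phases share a boundary time, the lookup returns the earlier phase, and it returns `None` for times the description does not cover (before t=10 and between 58 and 60).

```python
# (start, end, stimulus, expressions); end=None means "until the run ends".
PHASES = [
    (10, 58, "face-to-face contact, acceptable intensity", ("interest", "happiness")),
    (60, 90, "experimenter sways back and forth", ("disgust", "anger")),
    (90, 115, "face turned away", ("interest",)),
    (115, 135, "face-to-face contact, acceptable intensity", ("happiness",)),
    (135, 170, "experimenter turns away", ("sadness",)),
    (170, None, "face-to-face contact", ("interest", "happiness")),
]


def phase_at(t):
    """Return (stimulus, expressions) for time t, or None if not covered."""
    for start, end, stimulus, expressions in PHASES:
        if t >= start and (end is None or t <= end):
            return stimulus, expressions
    return None


print(phase_at(75))
print(phase_at(150))
```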

The results from the rest of the aforementioned experiments can be

found in "Infant-like Social

Interactions Between a Robot and a Human Caretaker". A sampling of

video clips from similar experiments is available in the next section.

All of the video clips are recorded at a resolution of 320 by 240.

The video clips are available in two formats (as Quicktime movies and

as MPEGs), and at two frame rates (30 frames per second and 15 frames

per second). We suggest that you start by viewing the 15 frames per

second clips, since they are smaller and quicker to download.

All video clips are Copyright, 1997, by the Cog Shop, MIT Artificial

Intelligence Laboratory, Massachusetts Institute of Technology. These

clips may not be distributed, published, or rebroadcast without prior

written consent.

Here is some 1998 footage showing Kismet engaged in social

interaction with Cynthia Breazeal (Ferrell). Kismet is attending to

the motion of her face or to the motion of other stimuli, and providing

emotive cues to regulate the intensity of interaction.

Support for this research was provided by a MURI grant under the

Office of Naval Research contract N00014--95--1--0600. Early designs

and implementations of the motivational system took place during a

visiting appointment at the Santa Fe Institute.

The Importance of Regulating Social Interactions

En Route to Learning in a Social Context

The Robotic Platform

![[Kismet: May 1997]](headSmall.jpg)

![[Kismet: Sept 1997]](SFI_interestBWsmall.jpg)

![[Kismet: Feb 1997]](NoMouthBWsmall.jpg)

![[Kismet: March 1998]](SurpriseKismetBWsmall.jpg)

![[Kismet's facial expressions]](collage-label.jpg)

Computational Hardware

![[Kismet's computational hardware]](hardware.jpg)

The Software System

![[Behavior engine architecture]](framework.jpg)

Early Experiments in Regulating Social Interaction

![[social drive circuit]](social-circuit.jpg)

Results from Regulating the Intensity of Face-to-Face

Interaction

![[face-to-face interaction experiments]](face-color.jpg)

Kismet video clips

Footage from a Variety of Social Interaction Experiments

Face-to-face Interaction

This clip shows

how Kismet responds when engaged in face-to-face contact with

Cynthia. Kismet becomes more asocial (as shown by a disgusted

expression) when Cynthia moves too much and over-stimulates Kismet.

However, Kismet responds positively to an appropriate

amount of stimulation.

Interaction with a Slinky

This clip shows how Kismet responds to varying amounts of slinky

motion. Kismet becomes more distressed (shown by an expression of

fear) when Cynthia moves the slinky too vigorously, causing Kismet to be

over-stimulated. However, Kismet likes small slinky motions.

Over-stimulation with a Stuffed Toy

This

clip shows how Kismet responds after being over-stimulated for an

extended period of time. Because Cynthia refuses to engage Kismet at a

suitable level of intensity and continues to wave the stuffed toy

vigorously in front of Kismet's face, Kismet must terminate the

interaction so it can restore itself to a state of homeostatic

balance. To do so, Kismet shuts its eyes and goes to sleep. As it

sleeps, all of its drives are restored to the balanced regime. Once

this occurs, Kismet awakens and is ready to resume interaction.

Related Publications