High-Resolution Mapping and Modeling of Multi-Floor Architectural Interiors

MIT9904-20

Progress Report: July 1, 1999–December 31, 1999

Seth Teller

Project Overview

In our initial proposal, we set out to extend and complement our successful automated mapping system for exterior urban spaces by adding the capability to map interior spaces. We proposed to deploy a rolling sensor equipped with a laser range-finder, a high-resolution digital camera, and positioning instrumentation (wheel encoders and a precise inertial measurement system) to acquire and register dense color and range observations of interior environments. The sensor package was to be directed by remote radio control, and its positioning instrumentation was to track both horizontal motion (e.g., along hallways and through rooms) and vertical motion (e.g., up and down wheelchair ramps and inside elevators).

We proposed to acquire the following instrumentation: a Nomadics rolling robot, a Canon Optura digital video camera, a K2T scanning laser range-finder, a GEC Marconi inertial measurement unit, and a set of Pacific Crest radio modems. We also requested support for one PhD student, one MEng student, and two full-time UROP (undergraduate research) students. By the end of 1999, we expected to demonstrate the integrated sensor, together with geometry and texture data captured at better than 10 cm resolution from several floors of Technology Square (including LCS and the AI Lab).

Progress Through December 1999

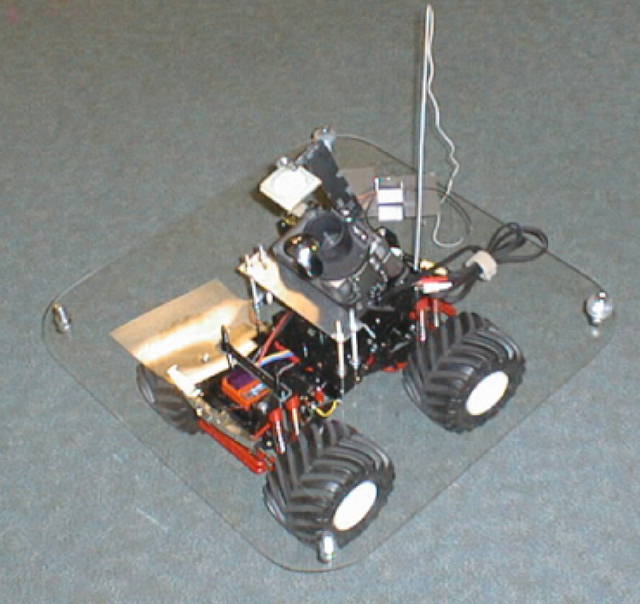

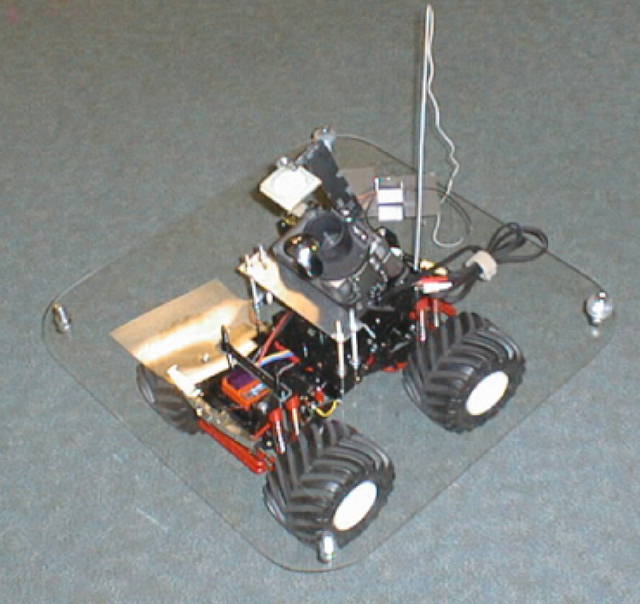

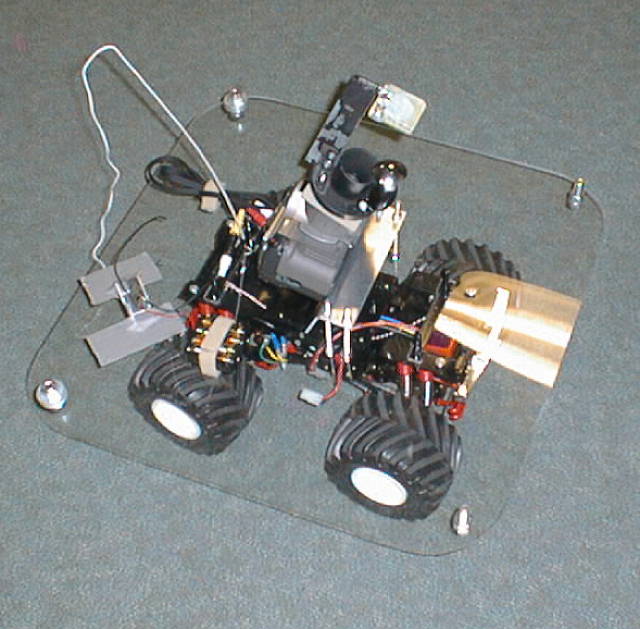

We have built a prototype indoor acquisition system (pictured above) consisting of the following components:

Radio-controlled vehicle. This is a small (50 × 50 × 50 cm) wheeled platform with an electric motor, a spring-based suspension system, and radio-controlled steering and throttle.

Omni-directional optics. This is a catadioptric mirror manufactured by CycloVision, Inc., which images a 215-degree field of view. The accompanying software can extract from this field of view any sub-image corresponding to the view of a conventional frame camera.

Digital video recorder. This is a Canon Optura DVR, which we have combined with the CycloVision optics to produce a working recording system for omni-directional imagery. All mounting hardware was custom-designed and built at MIT.

Television transmitter. A 900 MHz commercial off-the-shelf (COTS) transmitter sends a live copy of the video signal back to the base station for monitoring.

Wheel encoders for odometry. A configuration of magnets, counters, and interface hardware will allow on-board software to count individual wheel revolutions. This capability is still under development.

Power source. A set of COTS batteries powers all of the above components.
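Once the wheel encoders are working, their revolution counts translate directly into distance traveled. The sketch below assumes a hypothetical `magnets_per_rev` pulses per wheel revolution (1 if, as described above, whole revolutions are counted) and a known wheel diameter. Because wheel counts give distance but not direction, the heading for each segment is assumed to come from a separate source, for example the inertial sensor planned for later; the function names are illustrative, not part of the existing on-board software.

```python
import math


def counts_to_distance(counts, magnets_per_rev, wheel_diameter_m):
    """Distance rolled (metres) for a number of encoder pulses from one wheel.

    Assumption: each revolution produces `magnets_per_rev` pulses.
    """
    revolutions = counts / magnets_per_rev
    return revolutions * math.pi * wheel_diameter_m


def dead_reckon(samples, magnets_per_rev, wheel_diameter_m, start=(0.0, 0.0)):
    """Integrate (pulse_count, heading_rad) samples into a 2-D path.

    Heading is assumed to be supplied externally (hypothetically by an
    inertial sensor); the wheel counts only provide distance per segment.
    Returns the list of (x, y) positions, starting with `start`.
    """
    x, y = start
    path = [(x, y)]
    for counts, heading in samples:
        ds = counts_to_distance(counts, magnets_per_rev, wheel_diameter_m)
        x += ds * math.cos(heading)  # advance along the current heading
        y += ds * math.sin(heading)
        path.append((x, y))
    return path
```

For example, with four magnets on a 10 cm wheel, `dead_reckon([(4, 0.0), (4, math.pi / 2)], 4, 0.1)` rolls one revolution east and then one revolution north, ending near (0.314, 0.314) m. Errors in heading and wheel slip accumulate without bound in this scheme, which is one reason to fuse odometry with other sensors.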

We have entered into a collaboration with Prof. John Leonard of the Ocean Engineering Department to apply our techniques to higher-quality data gathered with an Animatics autonomous mobile robot. This robot has better control and odometry software than our current platform. We expect to begin work with this new platform in spring 2000.

Using our existing platform, we have acquired an omni-directional color video stream along a path through the second floor of Technology Square.

Here is an example image acquired by the Rover:

The MPEG encoding of the sequence (100 Mb) is available at:

http://graphics.lcs.mit.edu/~bahamas/rover/Test_001SQ.mpg
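The CycloVision software extracts perspective sub-images from omni-directional frames such as these. The report does not describe how it does so. The sketch below shows one standard way to compute such a view, under an assumed mirror model: a paraboloidal mirror viewed by an orthographic camera, with the mirror axis along +z and the image axes aligned with x and y. Under that model a scene ray at angle θ from the axis lands at image radius r90·tan(θ/2), where `r90` (the image radius of horizontal rays) and the image center (`cx`, `cy`) are hypothetical calibration parameters. The function names are illustrative only.

```python
import math


def omni_pixel(d, cx, cy, r90):
    """Map a unit ray direction d = (x, y, z) in the mirror frame to omni-image (u, v).

    Assumes a paraboloidal mirror with an orthographic camera: image radius is
    r90 * tan(theta / 2), i.e. a stereographic projection of the ray.
    """
    x, y, z = d
    if z <= -1.0 + 1e-12:
        raise ValueError("ray along -z falls in the mirror's blind spot")
    s = r90 / (1.0 + z)  # tan(theta/2) = sqrt(x^2 + y^2) / (1 + z) for a unit ray
    return cx + s * x, cy + s * y


def perspective_lookup(width, height, fov_deg, yaw_deg, pitch_deg, cx, cy, r90):
    """For each pixel of a virtual pinhole camera, return the omni-image (u, v) to sample.

    yaw is measured in the mirror's x-y plane; positive pitch tilts toward +z.
    """
    f = (width / 2.0) / math.tan(math.radians(fov_deg) / 2.0)  # focal length in pixels
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    # Orthonormal camera frame: forward, right, up.
    fwd = (math.cos(pitch) * math.cos(yaw), math.cos(pitch) * math.sin(yaw), math.sin(pitch))
    right = (math.sin(yaw), -math.cos(yaw), 0.0)
    up = (-math.sin(pitch) * math.cos(yaw), -math.sin(pitch) * math.sin(yaw), math.cos(pitch))
    table = []
    for row in range(height):
        yc = height / 2.0 - (row + 0.5)  # vertical offset from image center, up positive
        line = []
        for col in range(width):
            xc = (col + 0.5) - width / 2.0  # horizontal offset, right positive
            ray = [f * fwd[i] + xc * right[i] + yc * up[i] for i in range(3)]
            n = math.sqrt(sum(c * c for c in ray))
            line.append(omni_pixel(tuple(c / n for c in ray), cx, cy, r90))
        table.append(line)
    return table
```

The lookup table depends only on the virtual camera and the calibration, so it can be computed once and reused for every frame of the video, sampling the omni-directional image at each (u, v) with nearest-neighbor or bilinear interpolation.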

Research Plan for the Next Six Months

Over the next 6–12 months, we will investigate five extensions to the acquisition system. First, we will use spherical optical flow (ego-motion estimation from dense texture) and feature tracking (ego-motion estimation from edge and corner features). Second, we will incorporate an inertial sensor, as originally planned. Third, we will add a scanning laser range-finder, as originally planned, and use its range data both for ego-motion estimation and for structure acquisition. Fourth, we will fuse color samples from the video camera with range samples from the range-finder to produce structure samples carrying both color and depth. Fifth, we will develop a robust navigation filter, analogous to the filter implemented by the Argus sensor, that combines navigation estimates from odometry, inertial sensors, sparse and dense optical processing, and range samples into a single robust estimate of the Rover's pose in a three-dimensional coordinate system.
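The core idea of such a navigation filter can be illustrated in one dimension. The sketch below is a generic scalar Kalman filter, not the Argus filter: relative motion estimates (e.g., from odometry) are applied in a predict step that grows the uncertainty, and absolute estimates (e.g., from optical or range processing) are fused in an update step weighted by their variances. A simple innovation gate rejects measurements that disagree with the prediction by more than a chosen number of standard deviations, which is one way to make the fusion robust to outliers. The class name, gate value, and noise figures are hypothetical; a full system would track all six pose degrees of freedom with a multivariate filter.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """1-D Kalman filter over one pose coordinate (illustrative sketch)."""

    x: float  # state estimate, e.g. position along a hallway (metres)
    p: float  # variance of the estimate

    def predict(self, delta: float, q: float) -> None:
        """Apply a relative motion estimate `delta` with variance `q`."""
        self.x += delta
        self.p += q  # uncertainty grows with each dead-reckoned step

    def update(self, z: float, r: float, gate: float = 3.0) -> bool:
        """Fuse an absolute measurement `z` with variance `r`.

        Rejects z if its innovation exceeds `gate` standard deviations of the
        innovation variance; returns True if the measurement was accepted.
        """
        innovation = z - self.x
        s = self.p + r  # innovation variance
        if innovation * innovation > gate * gate * s:
            return False  # treat as an outlier and leave the state unchanged
        k = self.p / s  # Kalman gain: how far to move toward z
        self.x += k * innovation
        self.p *= 1.0 - k  # fused estimate is more certain than either input
        return True
```

For example, starting from `ScalarKalman(x=0.0, p=0.01)`, an odometry step `predict(1.0, 0.04)` followed by `update(1.2, 0.05)` moves the estimate to about 1.1 with variance about 0.025, while a subsequent wildly inconsistent reading such as `update(5.0, 0.05)` is rejected by the gate.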