| |

| Principal Investigators: |

| Neal Checka |

| David Demirdjian |

|

|

| The Problem: |

| The goal of this project is to design and build a multi-person tracking system using a network of stereo cameras. Our system, installed in an ordinary conference room, can track people and estimate their trajectories as well as characteristics such as size and posture. |

|

| Motivation: |

| Systems that can track and understand people have a wide variety of commercial applications. Computers of the future are predicted to interact more naturally with humans than they do now. Instead of the desktop paradigm, in which humans communicate by typing, future computers will be able to understand human speech and movements. Our system demonstrates what a solely vision-based system can do toward these ends. |

| |

| Our Approach: |

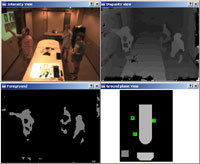

| We have developed a system that can perform dense, fast range-based tracking with modest computational complexity. We apply ordered disparity search techniques to prune most of the disparity search computation during foreground detection and disparity estimation, yielding a fast, illumination-insensitive 3D tracking system. When tracking people, we have found that rendering an orthographic vertical projection of detected foreground pixels is a useful model. |
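The pruning idea can be illustrated on a single scanline. The sketch below is only one plausible reading of "ordered disparity search", not the system's actual implementation: it assumes a per-pixel background disparity model is available, tests that disparity first, and runs the full search only where the background hypothesis fails. The function names, the sum-of-absolute-differences cost, and the threshold are all hypothetical choices made for brevity.

```python
def match_cost(left, right, x, d, half_window=1):
    """Sum of absolute differences between a window around left[x]
    and the window around right[x - d] (indices clamped at the borders)."""
    total = 0
    for k in range(-half_window, half_window + 1):
        xl = min(max(x + k, 0), len(left) - 1)
        xr = min(max(x + k - d, 0), len(right) - 1)
        total += abs(left[xl] - right[xr])
    return total


def ordered_disparity_scanline(left, right, background_disparity,
                               max_disparity, threshold):
    """Label each pixel as background or foreground, testing the
    background disparity first so most pixels skip the full search.

    Returns (foreground flags, disparities, number of cost evaluations).
    """
    foreground, disparity, evaluations = [], [], 0
    for x in range(len(left)):
        d_bg = background_disparity[x]
        evaluations += 1
        if match_cost(left, right, x, d_bg) <= threshold:
            # Background hypothesis holds: no further search at this pixel.
            foreground.append(False)
            disparity.append(d_bg)
            continue
        # Background hypothesis rejected: fall back to the full search.
        best_d, best_cost = d_bg, None
        for d in range(max_disparity + 1):
            evaluations += 1
            cost = match_cost(left, right, x, d)
            if best_cost is None or cost < best_cost:
                best_d, best_cost = d, cost
        foreground.append(True)
        disparity.append(best_d)
    return foreground, disparity, evaluations
```

In a mostly static room, nearly every pixel passes the first test, so the number of cost evaluations stays close to one per pixel rather than one per pixel per candidate disparity.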

|

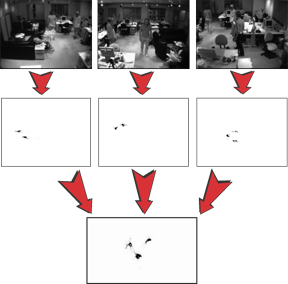

A "plan-view" image facilitates correspondence over time, since only a 2D search is required. Previous systems would segment foreground data into regions prior to projecting into a plan view, followed by region-level tracking and integration, potentially leading to sub-optimal segmentation and/or object fragmentation. Instead, we develop a technique that altogether avoids any early segmentation of foreground data. We merge the plan-view images from each view and estimate over time a set of trajectories that best represents the integrated foreground density. The figure on the left shows three people standing in a room, not all of whom are visible to each camera. Foreground points are projected onto a ground plane. Ground-plane points from all cameras are then superimposed into a single data set before clustering the points to find person locations.

|

|

Detecting locations of users in a room using multiple views and plan-view integration.

|
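The projection, merging, and clustering steps described above can be sketched for a single frame as follows. This is a minimal illustration under stated assumptions, not the project's code: foreground points are assumed to be already expressed in a shared world frame with z as height, the grid cell size and count threshold are arbitrary, and connected-component grouping stands in for whatever clustering the system actually uses. The function names are hypothetical.

```python
from collections import deque


def plan_view_histogram(points_per_camera, cell_size):
    """Superimpose foreground points from every camera onto one
    ground-plane grid by dropping the height coordinate (assumed z)."""
    counts = {}
    for points in points_per_camera:
        for x, y, _z in points:
            cell = (int(x // cell_size), int(y // cell_size))
            counts[cell] = counts.get(cell, 0) + 1
    return counts


def cluster_cells(counts, cell_size, min_count):
    """Group 8-connected occupied cells and return one count-weighted
    center per group, in world units on the ground plane."""
    occupied = {c for c, n in counts.items() if n >= min_count}
    seen, centers = set(), []
    for start in sorted(occupied):
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        total = sum_x = sum_y = 0
        while queue:
            cx, cy = queue.popleft()
            n = counts[(cx, cy)]
            total += n
            sum_x += n * cx
            sum_y += n * cy
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    neighbor = (cx + dx, cy + dy)
                    if neighbor in occupied and neighbor not in seen:
                        seen.add(neighbor)
                        queue.append(neighbor)
        # Convert the weighted mean cell index to the cell-center position.
        centers.append(((sum_x / total + 0.5) * cell_size,
                        (sum_y / total + 0.5) * cell_size))
    return centers
```

Because the points are merged before any grouping, a person seen by only one camera still produces a cluster, and a person seen by several cameras simply contributes a denser one. Linking these per-frame locations into trajectories over time is a separate step that this sketch does not attempt.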

| Trajectory estimation is performed using a... |

| Future Work: |

|

| Publications: |

| 1. Trevor Darrell, David Demirdjian, Neal Checka, Pedro Felzenszwalb, Plan-view Trajectory Estimation with Dense Stereo Background Models, Proceedings of the International Conference on Computer Vision, 2001 |

|

| Demos: |

| Demo 1 (9.85 MB): Shows the server module detecting the locations of people in a room using multiple views. Each person's posture is color-coded (sitting = red, standing = green). An active camera tracks the tallest person in the room. |